Face analysis based on deep learning

With the advancement of deep learning technology, the performance of face analysis has also improved.

I will introduce a high-performing open-source face analysis library and explain how to use it.

InsightFace: 2D and 3D Face Analysis Project

https://github.com/deepinsight/insightface

※ License: please check the site above.

The project shares various models; here I would like to introduce the buffalo_l model pack.

buffalo_l provides the bounding box, key points, detection score, 2D/3D landmarks, gender, age, embedding, and pose of each face.

I will demonstrate the process of face analysis using Python.

Python package install

Please install the following packages.

(You do not need to install packages that are already installed.)

- pip install numpy

- pip install opencv-python

- pip install pillow

- pip install insightface

- pip install onnxruntime

Model download

Download the model through the following link.

- Download link:

buffalo_l download

Unzip the downloaded file next to your Python source code so that the model files end up in checkpoints/models/buffalo_l.

The tree structure should be as follows.

```
├─ source_code.py
└─ checkpoints
   └─ models
      └─ buffalo_l
         ├─ 1k3d68.onnx
         ├─ 2d106det.onnx
         ├─ det_10g.onnx
         ├─ genderage.onnx
         └─ w600k_r50.onnx
```
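Before running the analysis, it can help to confirm that the files really are where the tree above puts them. The sketch below assumes exactly that layout (root `./checkpoints`, pack name `buffalo_l`); `missing_model_files` and `BUFFALO_L_FILES` are hypothetical helpers, not part of InsightFace.

```python
from pathlib import Path

# The five ONNX files of the buffalo_l pack, as listed in the tree above
BUFFALO_L_FILES = (
    "1k3d68.onnx",
    "2d106det.onnx",
    "det_10g.onnx",
    "genderage.onnx",
    "w600k_r50.onnx",
)


def missing_model_files(root="./checkpoints", name="buffalo_l"):
    """Return the expected model files that are absent under <root>/models/<name>."""
    model_dir = Path(root) / "models" / name
    return [f for f in BUFFALO_L_FILES if not (model_dir / f).is_file()]


if __name__ == "__main__":
    missing = missing_model_files()
    if missing:
        print("Missing model files:", ", ".join(missing))
    else:
        print("buffalo_l is ready.")
```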

Python code

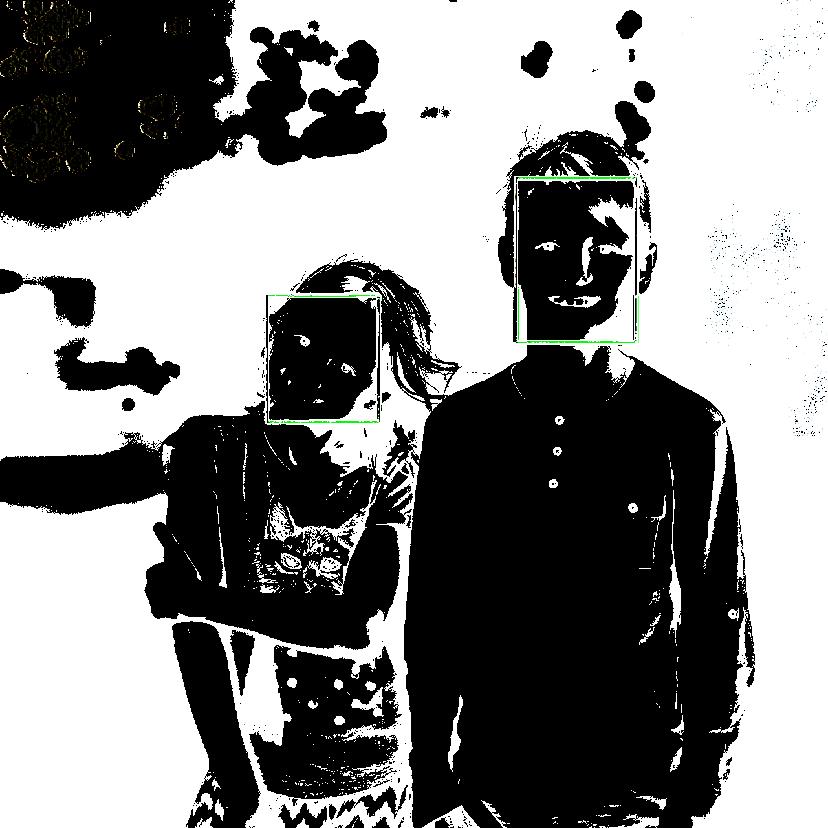

The following code detects faces and draws a rectangle around each of them.

The information about the detected faces is stored in faces.

Several faces can be detected at once, and since faces is a list, you can access each face's information by index (faces[0], faces[1], ...).

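```python
import cv2
import insightface
import numpy as np
import onnxruntime
from PIL import Image

# Use every ONNX Runtime execution provider available on this machine (e.g. CUDA, CPU)
providers = onnxruntime.get_available_providers()

# Load the buffalo_l model pack from ./checkpoints/models/buffalo_l
face_analyser = insightface.app.FaceAnalysis(name="buffalo_l", root="./checkpoints", providers=providers)

# ctx_id >= 0 keeps the providers above (a negative value forces CPU);
# det_size is the input resolution of the face detector
det_size = (320, 320)
face_analyser.prepare(ctx_id=0, det_size=det_size)

# Load the image with PIL (forcing 3 channels) and convert RGB to the BGR order used by OpenCV
img = Image.open('face.jpg').convert('RGB')
img = cv2.cvtColor(np.array(img), cv2.COLOR_RGB2BGR)

# Detect and analyse every face; the result is a list with one entry per face
faces = face_analyser.get(img)
print(faces)

if len(faces) >= 1:
    for face in faces:
        # bbox is [x1, y1, x2, y2] as floats; round to integer pixel coordinates
        x1, y1, x2, y2 = (round(float(v)) for v in face['bbox'])
        color_bgr = (0, 255, 0)
        box_thickness = 3
        cv2.rectangle(img, (x1, y1), (x2, y2), color_bgr, box_thickness)
    # Save once, after every face has been drawn
    cv2.imwrite('output.jpg', img)
else:
    print('Face not found.')
```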

When I ran the code, it drew a rectangle around the face like this.

In the code above, each face contains the following nine types of analysis information.

- face['bbox']: Bounding box (position of the face as [x1, y1, x2, y2])

- face['kps']: Key points (locations of the eyes, nose, and mouth corners)

- face['det_score']: Detection score (score indicating the likelihood that the detected object is a face)

- face['landmark_2d_106']: 2D face landmarks (106 points)

- face['landmark_3d_68']: 3D face landmarks (68 points representing the eyes, eyebrows, nose, mouth, and facial contour)

- face['pose']: Pitch, yaw, roll (tilting the face up/down, rotating it left/right, tilting it left/right)

- face['gender']: Gender

- face['age']: Age

- face['embedding']: Embedding vector
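InsightFace's Face class is a dict subclass, so each element of faces can be read with keys as above. When an image contains several people, a common pattern is to keep only the largest face. The sketch below does that by bbox area; `bbox_area` and `largest_face` are hypothetical helpers written for this example.

```python
def bbox_area(face):
    """Area in pixels of a face's bounding box [x1, y1, x2, y2]."""
    x1, y1, x2, y2 = (float(v) for v in face["bbox"])
    # Guard against degenerate boxes where x2 < x1 or y2 < y1
    return max(0.0, x2 - x1) * max(0.0, y2 - y1)


def largest_face(faces):
    """Return the face with the largest bounding box, or None if the list is empty."""
    return max(faces, key=bbox_area, default=None)
```

Usage with the list from the code above: `main_face = largest_face(faces)`.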

Since we have already checked the bbox, let's look at the remaining eight.

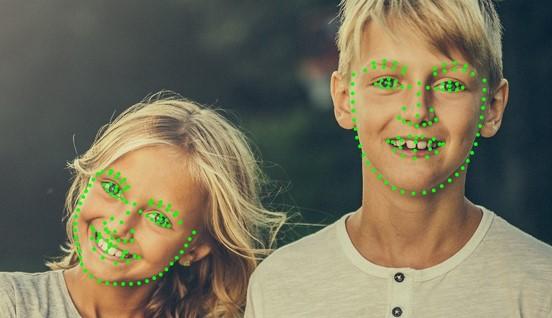

kps (Key points)

kps holds five key points: the centers of the two eyes, the tip of the nose, and the two corners of the mouth.

When a dot is placed at each kps coordinate, they are represented as follows.
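kps is a 5 × 2 array of (x, y) pixel coordinates, so drawing it only requires one dot per row. In OpenCV this is `cv2.circle(img, (x, y), radius, color, -1)` for each point; the sketch below does the same with plain NumPy so it works on any H × W × 3 array. `draw_dots` is a hypothetical helper, and the default color (0, 0, 255) is red only because the image is in BGR order.

```python
import numpy as np


def draw_dots(img, points, radius=2, color=(0, 0, 255)):
    """Paint a filled dot at each (x, y) point of an H x W x 3 image, in place."""
    h, w = img.shape[:2]
    ys, xs = np.ogrid[:h, :w]  # row indices (h x 1) and column indices (1 x w)
    for x, y in np.asarray(points, dtype=np.float32):
        cx, cy = round(float(x)), round(float(y))
        # Pixels within `radius` of the point form the dot
        mask = (xs - cx) ** 2 + (ys - cy) ** 2 <= radius ** 2
        img[mask] = color
    return img
```

Usage: `draw_dots(img, face['kps'], radius=3)` for each face, then `cv2.imwrite('kps.jpg', img)`.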

det_score (Detection score)

The det_score represents the likelihood that the detected object is a face.

It is a real number between 0 and 1; the closer the value is to 1, the more likely it is that the object is a face.
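A typical use of det_score is to discard uncertain detections before further processing. In recent insightface releases, `FaceAnalysis.prepare()` also takes a `det_thresh` argument that applies a cutoff inside the detector; the sketch below applies an extra, stricter one afterwards. `filter_by_score` is a hypothetical helper and 0.7 is an arbitrary example value.

```python
def filter_by_score(faces, min_score=0.7):
    """Keep only the faces whose detection score is at least min_score."""
    # min_score=0.7 is only an example; tune it on your own images
    return [face for face in faces if float(face["det_score"]) >= min_score]
```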

landmark_2d_106

These are 106 points representing the eyes, eyebrows, nose, mouth, and facial outline in a 2D coordinate system.

Here is how they are represented.
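landmark_2d_106 is a 106 × 2 array of (x, y) pixel coordinates, so the same landmark lands at different pixels depending on where the face is and how large it is. To compare landmark shapes across faces, one option is to express them relative to the face's bbox. The sketch below does that; `normalize_to_bbox` is a hypothetical helper, and points outside the box simply get values outside [0, 1].

```python
import numpy as np


def normalize_to_bbox(landmarks, bbox):
    """Map (x, y) landmarks into bbox coordinates: (0, 0) top-left, (1, 1) bottom-right."""
    pts = np.asarray(landmarks, dtype=np.float32)[:, :2]
    x1, y1, x2, y2 = (float(v) for v in bbox)
    origin = np.array([x1, y1], dtype=np.float32)
    size = np.array([x2 - x1, y2 - y1], dtype=np.float32)
    return (pts - origin) / size
```

Usage: `normalize_to_bbox(face['landmark_2d_106'], face['bbox'])`.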

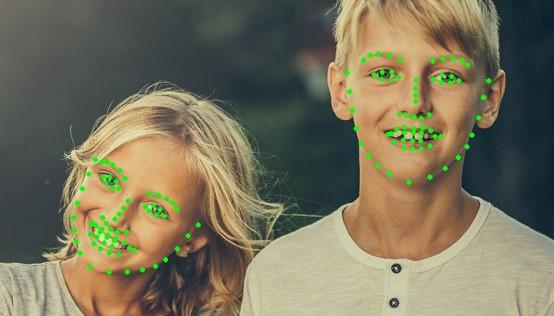

landmark_3d_68

These are 68 points representing the eyes, eyebrows, nose, mouth, and facial contour in a 3D coordinate system.

They are expressed as follows.
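The sketch below splits landmark_3d_68 (a 68 × 3 array) into facial regions. It assumes the points follow the widely used iBUG 300-W 68-point ordering, where "right" means the subject's right, and that the first two columns are image (x, y) coordinates and the third a relative depth. `REGIONS_68` and `split_regions` are hypothetical names.

```python
import numpy as np

# Index ranges of the standard 68-point (iBUG 300-W) markup, 0-based, end exclusive.
# Assumption: landmark_3d_68 uses this ordering.
REGIONS_68 = {
    "jaw": (0, 17),
    "right_eyebrow": (17, 22),
    "left_eyebrow": (22, 27),
    "nose": (27, 36),
    "right_eye": (36, 42),
    "left_eye": (42, 48),
    "mouth": (48, 68),
}


def split_regions(landmarks_3d):
    """Split a 68 x 3 landmark array into per-region (x, y) points and the z column."""
    pts = np.asarray(landmarks_3d, dtype=np.float32)
    if pts.shape != (68, 3):
        raise ValueError(f"expected shape (68, 3), got {pts.shape}")
    regions = {name: pts[start:end, :2] for name, (start, end) in REGIONS_68.items()}
    return regions, pts[:, 2]
```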

pose

pose holds the pitch, yaw, and roll angles of the face.

- Pitch: Tilt of the face up or down (positive: up, negative: down)

- Yaw: Rotation of the face left or right (positive: left, negative: right)

- Roll: Tilt of the face left or right (positive: left, negative: right)
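The sign conventions listed above can be turned into a readable description of head orientation. This sketch assumes the angles are in degrees and uses a hypothetical 15° threshold to decide when a face counts as turned; `describe_pose` is a name chosen for this example.

```python
def describe_pose(pose, threshold=15.0):
    """Describe (pitch, yaw, roll) in words, using the sign conventions listed above."""
    pitch, yaw, roll = (float(a) for a in pose)
    parts = []
    if pitch > threshold:
        parts.append("looking up")
    elif pitch < -threshold:
        parts.append("looking down")
    if yaw > threshold:
        parts.append("turned left")
    elif yaw < -threshold:
        parts.append("turned right")
    if roll > threshold:
        parts.append("tilted left")
    elif roll < -threshold:
        parts.append("tilted right")
    # No angle beyond the threshold: treat the face as frontal
    return ", ".join(parts) if parts else "frontal"
```

Usage: `print(describe_pose(face['pose']))`.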

gender

gender is the predicted gender: 1 for male and 0 for female.

age

age is the estimated age in years.
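Gender and age are often shown together as a text label above the face box. The sketch below builds such a label; it assumes gender is encoded as 1 = male, 0 = female (the same rule InsightFace's `Face.sex` property uses). `face_label` is a hypothetical helper.

```python
def face_label(face):
    """Build a short text label such as 'M, 31' from a face's gender and age."""
    # 1 = male, 0 = female, matching InsightFace's Face.sex property
    sex = "M" if int(face["gender"]) == 1 else "F"
    return f"{sex}, {int(face['age'])}"
```

Usage inside the drawing loop: `cv2.putText(img, face_label(face), (x1, y1 - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.8, (0, 255, 0), 2)`.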

embedding

This is a 512-dimensional feature vector that represents the identity of a face.

To determine if two faces belong to the same person, you can calculate the similarity between the two facial embedding vectors.

For example, with cosine similarity, the closer the value is to 1, the more likely it is that the two faces belong to the same person.
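Cosine similarity is the dot product of the two vectors divided by the product of their lengths. A minimal sketch follows; `cosine_similarity` and `is_same_person` are hypothetical helpers, and no threshold is suggested on purpose, since a suitable value should be chosen by checking pairs of your own images.

```python
import numpy as np


def cosine_similarity(emb1, emb2):
    """Cosine similarity between two embedding vectors, in the range [-1, 1]."""
    a = np.asarray(emb1, dtype=np.float64).ravel()
    b = np.asarray(emb2, dtype=np.float64).ravel()
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def is_same_person(emb1, emb2, threshold):
    """Return True when the similarity reaches a threshold you have chosen."""
    return cosine_similarity(emb1, emb2) >= threshold
```

Usage: `cosine_similarity(faces_a[0]['embedding'], faces_b[0]['embedding'])`, where faces_a and faces_b come from two calls to `face_analyser.get()`. InsightFace's Face object also exposes `normed_embedding`, which is already unit length, so a plain dot product of two normed embeddings gives the same value.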